R Interface to clBLAS Matrix Multiplication

This post discusses the use of AMD's OpenCL BLAS library to perform matrix multiplication on a GPU.

The library is accessed via a custom R package that allows for use and comparison of various BLAS libraries. The R package is implemented for OS X 10.10 and CentOS 6.6.

Timing tests are included for an AMD HD 5770 GPU installed in a 2010 model Mac Pro (2.8 GHz), and for an AMD R9 280 GPU installed in a custom system with an i7-4790k CPU (4.0 GHz).

Installation of clBLAS on OS X

The following assumes Xcode 6.4 has already been installed.

Make a directory for the build files.

cd ~

mkdir clblas

cd clblas

Download and expand clBLAS source code archive. (Check here to verify the version number of the latest release.)

curl "https://codeload.github.com/clMathLibraries/clBLAS/tar.gz/v2.4" -o "archive.tar.gz"

tar zxvf archive.tar.gz

This code uses the CMake system to build the library. Download the Mac OS X binary. (Check here to verify the version number of the latest release.)

curl "http://www.cmake.org/files/v3.3/cmake-3.3.0-rc3-Darwin-x86_64.dmg" -o "cmake.dmg"

Open the cmake.dmg file and drag the CMake application to the Applications folder.

Open the Applications folder and double-click CMake.

- Click the Browse Source... button and choose the clblas/clBLAS-2.4/src folder.

- Select the text in the box to the right of Where is the source code: and paste it into the box labeled Where to build the binaries, and then change clBLAS-2.4/src to clBLAS-2.4/bin

- Click the Configure button.

- When asked, "Build directory does not exist, should I create it?" click Yes.

- When asked, "Specify the generator for this project," select Xcode.

- Make sure "Use default native compilers" is selected.

- Click the Done button.

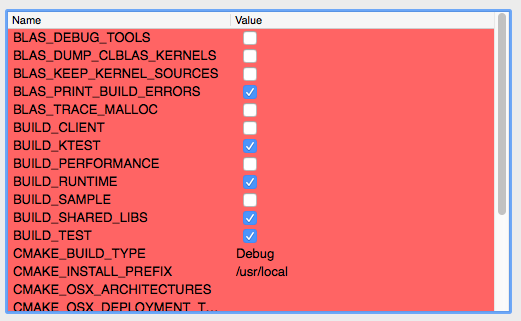

CMake will think for a while and then show these settings in the main window:

Uncheck the following boxes:

- BUILD_KTEST

- BUILD_TEST

- CORR_TEST_WITH_ACML

Make sure these (and only these) boxes are still checked:

- BLAS_PRINT_BUILD_ERRORS

- BUILD_RUNTIME

- BUILD_SHARED_LIBS

Edit the following text values:

- Set CMAKE_INSTALL_PREFIX to /usr/local/clblas

- Set CMAKE_BUILD_TYPE to Release

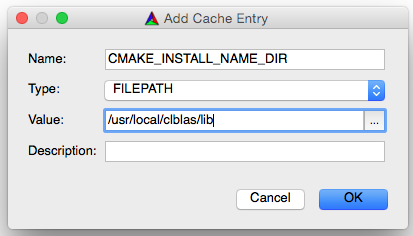

One additional parameter is needed for the build to work. Click the Add Entry button:

Fill in the dialog as follows:

Click the Configure button. When CMake is done, the settings background should no longer be red.

- The clBLAS ktest requires boost to be installed

- The clBLAS client requires boost to be installed

- MACOSX_RPATH is not specified...

Click the Generate button. Quit CMake.

To experiment with different CMake choices, the safest route is:

- Trash the clBLAS-2.4 directory.

- Re-expand the source code archive.

- Re-launch CMake and choose Delete Cache from the File menu.

Next, create a folder for the shared library, and change the ownership to your user name (replace michael in the second command with your own user name).

sudo mkdir -p /usr/local/clblas

sudo chown michael /usr/local/clblas

In the Finder, navigate to the folder clblas/clBLAS-2.4/bin; launch Xcode by double-clicking clBLAS.xcodeproj.

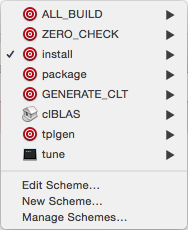

Set the popup in the upper left corner to install.

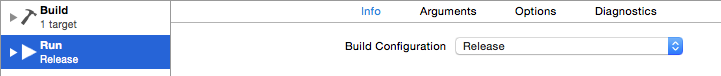

Then, use the same popup to select Edit Scheme....

Set the value for Run: Info: Build Configuration to Release.

In the Product menu, choose Build. Expect to see numerous warnings.

Verify that the library has been installed.

ls /usr/local/clblas/lib

Verify that the library has the correct installation location.

otool -L /usr/local/clblas/lib/libclBLAS.2.4.0.dylib

The first two lines of otool output should look like this:

/usr/local/clblas/lib/libclBLAS.2.4.0.dylib:

/usr/local/clblas/lib/libclBLAS.2.dylib (compatibility version 2.0.0, current version 2.4.0)

Installation of clBLAS on CentOS 6

The following assumes an appropriate AMD GPU and OpenCL SDK have already been installed; see Personal Linux R Server: Part IV.

Download, expand, and copy the clBLAS library binary. (Check here to verify the version number of the latest release.)

cd ~

mkdir clblas

cd ~/clblas

wget https://github.com/clMathLibraries/clBLAS/releases/download/v2.4/clBLAS-2.4.0-Linux-x64.tar.gz

tar -zxvf clBLAS-2.4.0-Linux-x64.tar.gz

sudo mv clBLAS-2.4.0-Linux-x64 /opt

Create a file to add clblas to the library search path:

sudo sh -c 'echo "/opt/clBLAS-2.4.0-Linux-x64/lib64" >> /etc/ld.so.conf.d/clBLAS-2.4.0-Linux-x64.conf'

Rebuild links and cache for shared libraries.

sudo ldconfig

Installation of multiblas R Package on OS X

The following assumes clBLAS has been installed as described above.

Download the multiblas package.

cd ~/Downloads

curl -OL https://github.com/quadrivio/multiblas/releases/download/0.90-1/multiblas_0.90-1.tar.gz

Launch R and install package.

setwd("~/Downloads")

Sys.setenv(PKG_LIBS = "-framework OpenCL -L/usr/local/clblas/lib/ -lclBLAS")

Sys.setenv(PKG_CPPFLAGS = "-I/usr/local/clblas/include")

install.packages("multiblas_0.90-1.tar.gz", repos = NULL, type = "source")

library(multiblas)

Installation of multiblas R Package on CentOS 6

The following assumes clBLAS has been installed as described above.

Download the multiblas package.

cd ~/Downloads

curl -OL https://github.com/quadrivio/multiblas/releases/download/0.90-1/multiblas_0.90-1.tar.gz

Launch R and install package.

setwd("~/Downloads")

Sys.setenv(PKG_CPPFLAGS = "-I/usr/lib64/openblas/include -I/opt/AMDAPPSDK-3.0-0-Beta/include -Dnullptr='NULL' -I/opt/clBLAS-2.4.0-Linux-x64/include")

Sys.setenv(PKG_LIBS = "$(BLAS_LIBS) -L/opt/AMDAPPSDK-3.0-0-Beta/lib/x86_64 -lOpenCL -L/opt/clBLAS-2.4.0-Linux-x64/lib64 -lclBLAS")

install.packages("multiblas_0.90-1.tar.gz", repos = NULL, type = "source")

library(multiblas)

Usage of multiblas

The purpose of this package is to make it easy to compare and use alternate implementations of BLAS matrix-multiplication functions.

The function blas.lib() creates an object containing a set of functions for a particular implementation. For example, the following creates a library using functions implemented in the R language itself:

base <- blas.lib(type="Base")

The following creates a library using the FORTRAN API to the BLAS library in use by R. (This is always available, but supplies double-precision functions only.)

lib <- blas.lib(type="R")

The following creates a library using the C API to the BLAS library in use by R. (This is always available for double-precision functions; it may or may not be available for single-precision functions, depending on the particular BLAS library in use.)

lib <- blas.lib(type="C")

The following creates a library using calls to a naive C implementation (i.e., slow but easy-to-understand).

lib <- blas.lib(type="naive")

The following creates a library using calls to the clBLAS library using the default GPU device.

lib <- blas.lib(type="clblas", processor="GPU")

Functions are available as attributes of the library object. If lib is the object produced by any of the above calls to blas.lib(), then the functions may be used as follows:

lib$crossprod(a)

# equivalent to R code:

# crossprod function

# function(x) {

# crossprod(x)

# }

#

lib$gemm(A, B, transposeA, transposeB, C, alpha, beta)

# equivalent to R code:

# gemm: general matrix-multiplication function

# function(A, B, transposeA = FALSE, transposeB = FALSE, C = NA, alpha = 1.0, beta = 0.0) {

# if (transposeA) A <- t(A)

# if (transposeB) B <- t(B)

# if (is.na(C[1])) C <- 0.0

#

# result <- alpha * A %*% B + beta * C

# return(result)

# }

This allows for convenient testing of the performance and accuracy of different implementations, by placing test code in a function that takes a library object as input. The test function code can be written once, and then called with different libraries.
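The "write the test once, call it with different libraries" idea can be sketched as follows. This is only an illustration: time_crossprod is a hypothetical helper name, and base_r_lib is a stand-in list (not produced by multiblas) that mimics a library object so the sketch runs without the package or a GPU.

```r
# Stand-in for a library object: a list whose crossprod element is a function.
# Hypothetical; it only mimics the shape of what blas.lib() is described as returning.
base_r_lib <- list(
  crossprod = function(x) base::crossprod(x)
)

# Test function written once; it accepts any library object that has $crossprod.
time_crossprod <- function(lib, n = 256, reps = 3) {
  set.seed(1)
  x <- matrix(runif(n * n), nrow = n)
  # Time several repetitions and keep the last result for an accuracy check.
  elapsed <- system.time(
    for (i in seq_len(reps)) result <- lib$crossprod(x)
  )[["elapsed"]]
  list(
    seconds = elapsed / reps,
    max_abs_error = max(abs(result - t(x) %*% x))
  )
}

out <- time_crossprod(base_r_lib)
print(out)
```

With multiblas installed, the same function could presumably be called with an object such as blas.lib(type="clblas", processor="GPU") in place of the stand-in; single-precision libraries would be expected to show a larger max_abs_error.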

Implementation

As of now, only the crossprod() and gemm() functions are implemented. The gemm() function is the most general matrix-multiplication function in the BLAS library, and thus serves as a useful example. The crossprod() function appears in a commonly-used R performance benchmark, and thus it may be useful in comparing standard results with results from optimized implementations.
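As a small worked example of the gemm semantics, the sketch below restates the equivalent R code given earlier under the hypothetical name gemm_ref (it is not part of multiblas) and applies it to tiny matrices whose results can be checked by hand.

```r
# gemm_ref is a hypothetical name; the body follows the "equivalent to R code"
# comment shown for lib$gemm.
gemm_ref <- function(A, B, transposeA = FALSE, transposeB = FALSE,
                     C = NA, alpha = 1.0, beta = 0.0) {
  if (transposeA) A <- t(A)
  if (transposeB) B <- t(B)
  if (is.na(C[1])) C <- 0.0
  alpha * A %*% B + beta * C
}

A <- matrix(1:6, nrow = 2)   # 2 x 3
B <- matrix(1:6, nrow = 3)   # 3 x 2
C <- diag(2)                 # 2 x 2 identity

plain  <- gemm_ref(A, B)                              # A %*% B
scaled <- gemm_ref(A, B, C = C, alpha = 2, beta = 3)  # 2 * A %*% B + 3 * C
via_t  <- gemm_ref(t(A), B, transposeA = TRUE)        # transposes t(A) back to A

print(plain)
print(scaled)
```

Here plain has rows (22, 49) and (28, 64), and scaled doubles those values and adds 3 on the diagonal.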

The library crossprod() function is only implemented for the special case where one argument is supplied. The built-in R function takes one or two arguments and has behavior equivalent to the following:

crossprod <- function(x, y = NULL) {
  if (is.null(y)) {
    return(t(x) %*% x)
  } else {
    return(t(x) %*% y)
  }
}

When only one argument is supplied, the result is a symmetric matrix, which allows for the use of the optimized syrk BLAS function.
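To illustrate why symmetry helps, here is a minimal sketch in plain R that computes only the upper triangle of t(x) %*% x and mirrors it into the lower triangle. The name crossprod_upper is hypothetical, and this is not how syrk is implemented in any BLAS library; it only shows that roughly half of the dot products suffice.

```r
# Hypothetical helper: compute t(x) %*% x using only upper-triangle dot products.
crossprod_upper <- function(x) {
  n <- ncol(x)
  result <- matrix(0, n, n)
  for (j in seq_len(n)) {
    for (i in seq_len(j)) {
      # Entry (i, j) is the dot product of columns i and j of x.
      result[i, j] <- sum(x[, i] * x[, j])
    }
  }
  # Mirror the upper triangle into the lower triangle.
  result[lower.tri(result)] <- t(result)[lower.tri(result)]
  result
}

set.seed(42)
x <- matrix(rnorm(20), nrow = 5)   # 5 x 4 input
s <- crossprod_upper(x)            # 4 x 4 symmetric result
n_dot_products <- ncol(x) * (ncol(x) + 1) / 2   # dot products actually computed
print(isSymmetric(s))
print(all.equal(s, crossprod(x)))
```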

Timing Results

Configuration

The test systems' hardware and software configurations are described in more detail in previous posts:

- Personal Linux R Server in a Mini-ITX Gaming Case (Part I)

- Personal Linux R Server (Part II: Software Setup)

- Personal Linux R Server (Part III: Leveling Up)

- Personal Linux R Server (Part IV: Final Upgrades)

- Improved R Performance with OpenBLAS and vecLib Libraries

GFLOPS calculation

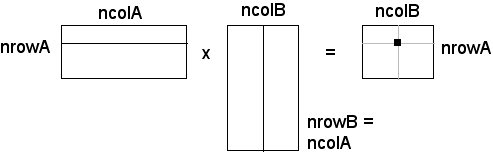

For general matrix multiplication, the resulting matrix contains ncolB x nrowA values, and each value is the result of the dot product of two vectors of length ncolA. Each dot product requires 2 x ncolA floating-point operations (one multiplication plus one addition, multiplied by the length), so the total number of operations is 2 x ncolA x ncolB x nrowA.

Because the crossprod() function with only one argument produces a result that is symmetric, it is only necessary to calculate the upper or lower triangular result. The resulting matrix has dimensions ncolB x ncolB (since, in this case, the A matrix is the transpose of B), and the dot product vector has length nrowB. The number of upper triangular values (including the diagonal) is 0.5 x ncolB x (1 + ncolB), and each requires 2 x nrowB operations. The total number of operations is, therefore, nrowB x ncolB x (1 + ncolB).
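The two operation counts above can be written as a short R sketch. The function names (gemm_flops, crossprod_flops, gflops) are hypothetical and chosen only for illustration; the formulas are the ones stated in the text.

```r
# Operation count for general matrix multiplication: 2 x ncolA x ncolB x nrowA.
gemm_flops <- function(nrowA, ncolA, ncolB) {
  2 * ncolA * ncolB * nrowA
}

# Operation count for one-argument crossprod: nrowB x ncolB x (1 + ncolB).
crossprod_flops <- function(nrowB, ncolB) {
  nrowB * ncolB * (1 + ncolB)
}

# Convert an operation count and an elapsed time in seconds to GFLOPS.
gflops <- function(flops, seconds) {
  flops / seconds / 1e9
}

n <- 4096
gemm_ops <- gemm_flops(n, n, n)
crossprod_ops <- crossprod_flops(n, n)

# Time a small base-R multiplication to show the conversion in use.
m <- 256
a <- matrix(runif(m * m), nrow = m)
elapsed <- system.time(for (i in 1:20) a %*% a)[["elapsed"]]
if (elapsed > 0) print(gflops(20 * gemm_flops(m, m, m), elapsed))
```

For 4096 x 4096 inputs, gemm_ops is about 1.37e11, so a reported rate of 437.70 GFLOPS corresponds to roughly 0.31 seconds per multiplication.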

Results Summary

- The test systems were: 1) 2.8 GHz 2010 Mac Pro with HD 5770 GPU, and 2) 4.0 GHz i7-4790k with R9 280.

- The HD 5770 GPU handles only single-precision floats. The R9 280 GPU handles both single and double-precision floats.

- With the R9 280 GPU, clBLAS performance improves dramatically when the size of the input matrices goes from 1024 x 1024 to 4096 x 4096. GPU performance is much worse than optimized CPU performance for 1024 x 1024 matrices. GPU performance varies from about the same to roughly 50% better than CPU performance for 4096 x 4096 matrices.

- With the older HD 5770 GPU, the performance of CPU and GPU are about the same for 1024 x 1024 matrices. The GPU performance is better than CPU performance for 4096 x 4096 matrices, especially for the gemm function.

- It is interesting to note that for 4096 x 4096 matrices, the clBLAS GFLOPS for the gemm function is always about double the clBLAS GFLOPS for the crossprod function. This suggests that the clBLAS implementation for syrk (symmetric matrix multiplication) does not make the best optimization for the fact that it is only necessary to calculate the upper (or lower) half of the result.
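The "about double" observation can be checked with simple arithmetic on the clBLAS rows of the 4096 x 4096 results reported in this post. The data frame below is only a convenience for the calculation; the numbers are copied from the tables, and the column names are chosen for illustration.

```r
# clBLAS GFLOPS at 4096 x 4096, copied from the tables in this post.
clblas_4096 <- data.frame(
  config    = c("HD 5770 single", "R9 280 single", "R9 280 double"),
  crossprod = c(75.04, 198.09, 132.19),
  gemm      = c(156.71, 437.70, 313.07)
)

# Ratio of gemm GFLOPS to crossprod GFLOPS for each configuration.
clblas_4096$ratio <- clblas_4096$gemm / clblas_4096$crossprod
print(clblas_4096)
```

The ratios come out between about 2.1 and 2.4, consistent with gemm running at roughly twice the crossprod rate on clBLAS.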

crossprod

Mac Pro 2010 with HD 5770.

Single-precision.

| Type | 1024 x 1024 GFLOPS | 2048 x 2048 GFLOPS | 4096 x 4096 GFLOPS |
|---|---|---|---|
| C (vecLib) | 44.78 | 53.05 | 60.29 |
| clBLAS | 41.34 | 61.39 | 75.04 |

i7-4790k with R9 280.

Single-precision.

| Type | 1024 x 1024 GFLOPS | 2048 x 2048 GFLOPS | 4096 x 4096 GFLOPS |
|---|---|---|---|
| C (OpenBLAS) | 134.35 | 168.51 | 219.60 |
| clBLAS | 12.80 | 70.44 | 198.09 |

i7-4790k with R9 280.

Double-precision.

| Type | 1024 x 1024 GFLOPS | 2048 x 2048 GFLOPS | 4096 x 4096 GFLOPS |
|---|---|---|---|
| C (OpenBLAS) | 97.71 | 103.54 | 130.68 |
| clBLAS | 9.35 | 48.55 | 132.19 |

gemm

Mac Pro 2010 with HD 5770.

Single-precision.

| Type | 1024 x 1024 x 1024 GFLOPS | 2048 x 2048 x 2048 GFLOPS | 4096 x 4096 x 4096 GFLOPS |
|---|---|---|---|
| C (vecLib) | 53.69 | 59.24 | 66.33 |
| clBLAS | 58.04 | 105.40 | 156.71 |

i7-4790k with R9 280.

Single-precision.

| Type | 1024 x 1024 x 1024 GFLOPS | 2048 x 2048 x 2048 GFLOPS | 4096 x 4096 x 4096 GFLOPS |
|---|---|---|---|
| C (OpenBLAS) | 178.96 | 241.97 | 302.73 |
| clBLAS | 37.68 | 170.10 | 437.70 |

i7-4790k with R9 280.

Double-precision.

| Type | 1024 x 1024 x 1024 GFLOPS | 2048 x 2048 x 2048 GFLOPS | 4096 x 4096 x 4096 GFLOPS |
|---|---|---|---|
| C (OpenBLAS) | 165.19 | 184.73 | 185.23 |
| clBLAS | 35.20 | 154.77 | 313.07 |

install_name_tool, e.g., as described here.